AWS Certified DevOps Engineer – Professional (DOP-C02)

Introduction

Welcome to AWS Certified DevOps Engineer – Professional (DOP-C02) Training

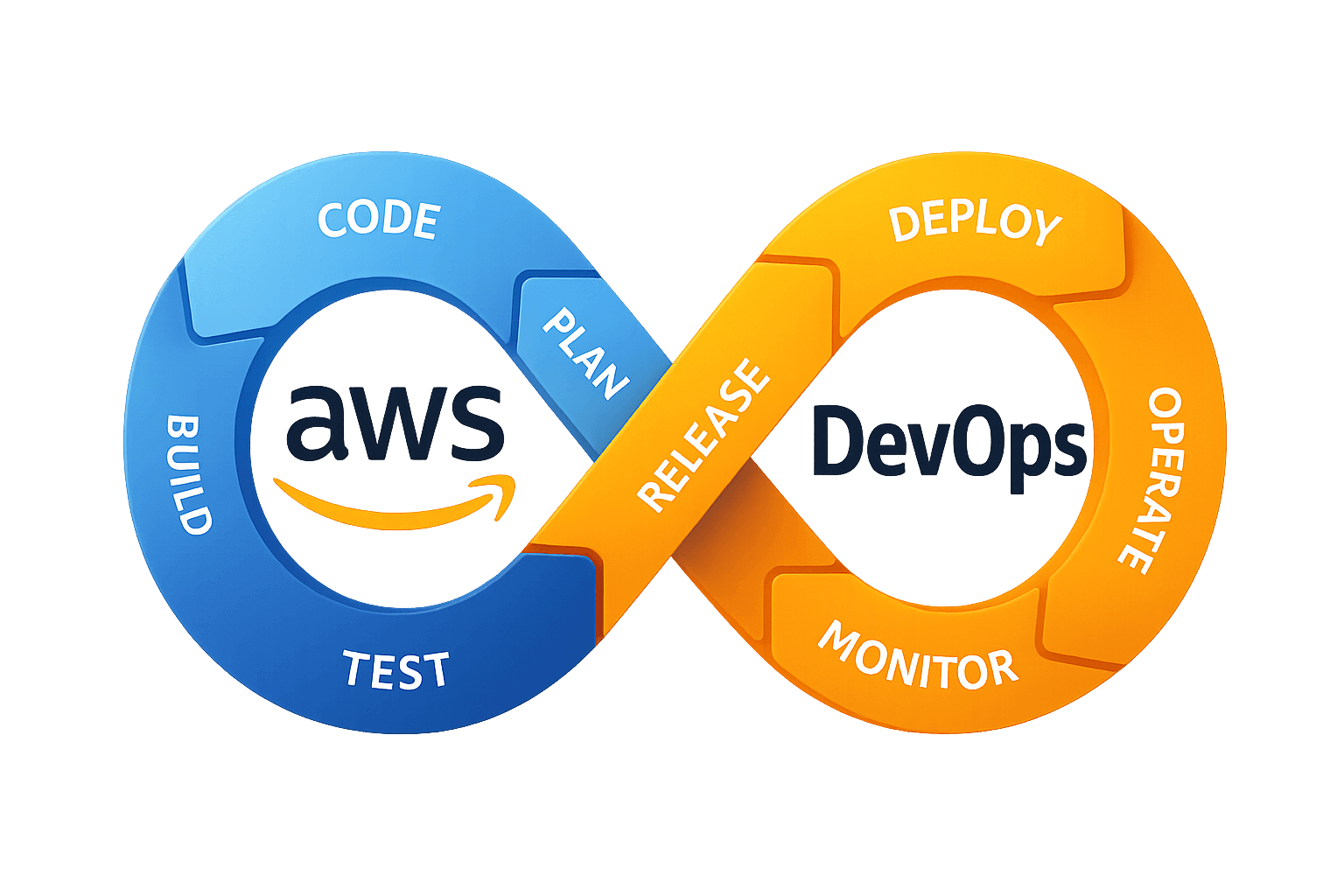

The AWS Certified DevOps Engineer – Professional (DOP-C02) is one of the most respected and challenging certifications in the cloud industry. It validates your expertise in implementing, managing, and automating end-to-end DevOps practices on AWS. In addition, it demonstrates your ability to build scalable, resilient, and secure CI/CD pipelines while leveraging AWS services that drive modern enterprises.

Why AWS DevOps Training Matters

Modern businesses demand faster software delivery, reliable systems, and continuous innovation. As a result, DevOps plays a crucial role by integrating automation, collaboration, and monitoring into every phase of the software lifecycle. The DOP-C02 certification proves your expertise in Continuous Integration (CI), Continuous Delivery (CD), Infrastructure as Code (IaC), observability, scaling, logging, and security automation. You will also gain practical experience using AWS services like AWS CodePipeline, AWS CodeBuild, AWS CloudFormation, Amazon CloudWatch, and Amazon EKS.

What You Gain from Our Training

At CloudTech Solutions, the AWS Certified DevOps Engineer – Professional (DOP-C02) training goes beyond exam preparation. Unlike standard courses, ours follows a job-ready, project-based approach with real-world scenarios, hands-on labs, and AWS best practices. This ensures you don’t just learn theory—you acquire the ability to deliver production-grade solutions. During this course, you will gain skills in the following areas:

- Designing and implementing automated CI/CD pipelines with CodePipeline, CodeBuild, and CodeDeploy

- Provisioning infrastructure with AWS CloudFormation, Terraform, and AWS CDK

- Deploying and scaling containers using Amazon ECS and EKS

- Monitoring workloads through CloudWatch, AWS X-Ray, and AWS Config

- Securing workflows with IAM, KMS, and Secrets Manager

- Automating compliance using AWS Config Rules and AWS CloudTrail

- Implementing blue/green and rolling deployments for zero downtime

- Building fault-tolerant and highly available DevOps pipelines

Outcome of the Training

By the end of this training, you will be ready to pass the AWS Certified DevOps Engineer – Professional (DOP-C02) exam. More importantly, you will graduate with the confidence to design, automate, and manage enterprise-scale DevOps solutions on AWS. This program ensures a complete package: Certification + Hands-on Projects + Interview Preparation = DevOps Job Success.

Syllabus

AWS Certified DevOps Engineer – Professional (DOP-C02) – Course Syllabus

The AWS Certified DevOps Engineer – Professional (DOP-C02) syllabus aligns with the official AWS DOP-C02 certification exam and outlines the practical modules you will master throughout the training journey at CloudTech Solutions.

Module 1: Introduction to AWS DevOps & Certification Path

- Understand the AWS DevOps Engineer Professional role and its relevance in today’s cloud landscape.

- Review AWS DevOps services and architecture.

- Explore exam domains and their alignment with job responsibilities.

- Create a free AWS account for hands-on labs.

- Set up IAM users, groups, roles, and policies for DevOps tasks.

- Explore AWS Developer Tools: CodeCommit, CodeBuild, CodeDeploy, CodePipeline.

Module 2: Source Control with Git & AWS CodeCommit

- Master Git-based workflows and source code collaboration on AWS.

- Initialize and manage repositories in AWS CodeCommit.

- Clone, push, pull, and manage branches using Git.

- Set up access permissions with IAM or Git credentials.

- Integrate CodeCommit with local development environments and pipelines.

- Enable notifications for commits and code reviews.

Module 3: Continuous Integration (CI) with AWS CodeBuild

- Understand the role of AWS CodeBuild in CI pipelines.

- Create and manage build projects.

- Write and customize buildspec.yml files for automation.

- Run unit tests, code analysis, and compilation steps.

- Secure builds using IAM roles and environment variables.

Module 4: Continuous Delivery (CD) with AWS CodeDeploy

- Deploy applications to EC2, Lambda, or on-prem environments using AWS CodeDeploy.

- Understand in-place vs. blue/green deployment strategies.

- Use AppSpec files for deployment hooks and lifecycle events.

- Integrate health checks and automatic rollbacks.

- Monitor deployments and analyze logs for troubleshooting.

Module 5: End-to-End Pipelines with AWS CodePipeline

- Create fully automated, multi-stage CI/CD pipelines using AWS CodePipeline.

- Add stages for build, test, approval, and deployment.

- Integrate custom actions and third-party tools like GitHub or Jenkins.

- Trigger pipelines automatically based on Git pushes or S3 changes.

- Monitor pipeline executions, successes, and failures.

Module 6: Infrastructure as Code (IaC) with CloudFormation & Terraform

- Learn the principles of Infrastructure as Code (IaC) in AWS.

- Create and deploy stacks using AWS CloudFormation.

- Use nested stacks and parameters for reusable templates.

- Automate CloudFormation deployments via CodePipeline.

- Understand Terraform basics with AWS provider and real use cases.

- Compare CloudFormation and Terraform for production scenarios.

Module 7: Logging, Monitoring & Alerts in AWS DevOps

- Enable CloudWatch Logs for CodeBuild and Lambda.

- Use CloudWatch Metrics to track application and pipeline performance.

- Create custom dashboards and CloudWatch Alarms for proactive monitoring.

- Understand AWS X-Ray for microservices tracing.

- Configure SNS alerts for CI/CD pipeline notifications.

Module 8: DevOps Security, IAM & Secrets Management

- Manage DevOps permissions using IAM roles and policies.

- Secure secrets and credentials with AWS Secrets Manager.

- Store configuration parameters in Systems Manager Parameter Store.

- Inject secrets safely into CI/CD pipelines.

- Apply least-privilege principles across automation workflows.
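
To make the least-privilege idea concrete, here is a minimal sketch in Python that builds an IAM policy document allowing a build role to read exactly one secret. The function name `secret_read_policy`, the statement ID, and the example ARN are hypothetical and exist only for illustration; the policy grammar (`Version`, `Statement`, `Effect`, `Action`, `Resource`) is standard IAM JSON.

```python
import json


def secret_read_policy(secret_arn: str) -> dict:
    """Return an IAM policy document that allows reading one secret only."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ReadSingleSecret",  # hypothetical statement ID
                "Effect": "Allow",
                # Only the read action, no write or delete permissions.
                "Action": ["secretsmanager:GetSecretValue"],
                # Scoped to a single secret rather than a wildcard.
                "Resource": [secret_arn],
            }
        ],
    }


# Hypothetical ARN, used for illustration only.
EXAMPLE_ARN = (
    "arn:aws:secretsmanager:us-east-1:111122223333:"
    "secret:demo/db-credentials-AbCdEf"
)

if __name__ == "__main__":
    print(json.dumps(secret_read_policy(EXAMPLE_ARN), indent=2))
```

The printed JSON could be attached to a CodeBuild service role. Note that the document contains no `*` in either the action or the resource, which is the property the least-privilege bullet is asking for.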

Module 9: Containerized DevOps with ECS, ECR & Fargate

- Build Docker images and push to Amazon ECR.

- Set up ECS clusters using EC2 or Fargate launch types.

- Create task definitions and deploy services on ECS.

- Integrate CI/CD pipelines for container deployment.

- Enable auto-scaling and monitor container logs with CloudWatch.

Module 10: Kubernetes (EKS) and CI/CD Integration

- Understand Amazon EKS and Kubernetes fundamentals.

- Launch and configure an EKS cluster for microservices.

- Integrate CI/CD pipelines for Kubernetes apps using CodePipeline or GitHub Actions.

- Use Helm charts for packaging and deployment.

- Monitor cluster health with CloudWatch and Container Insights.

Module 11: Blue-Green & Canary Deployment Strategies on AWS

- Implement Blue-Green deployments using CodeDeploy.

- Automate environment swaps and traffic shifting for zero-downtime releases.

- Use Route 53 for weighted routing in canary deployments.

- Set up pre- and post-deployment validations to ensure safe releases.

- Manage rollback strategies and monitor performance for reliability.

Module 12: Exam Preparation, Mock Interviews & Resume Projects

- Gain tips and strategies for tackling the DOP-C02 certification exam.

- Practice with exam-style questions and review detailed solutions.

- Work on real-world AWS DevOps projects suitable for resumes.

- Participate in mock interview sessions with scenario-based Q&A.

- Get guidance on certification vouchers, career paths, and job readiness.

Target Audience

System Administrators & Developers Seeking a DevOps Transition

If you are working as a system administrator, developer, or operations engineer and wish to move into the DevOps domain, the AWS DevOps Engineer – Professional (DOP-C02) course is an ideal starting point. Through this training, you will gain hands-on experience in CI/CD pipelines, infrastructure automation, monitoring, and security workflows across AWS environments. As a result, you can smoothly transition from traditional IT roles into cloud-native DevOps practices.

Examples include:

- L1/L2 support engineers transitioning to DevOps roles.

- Developers aiming to automate deployments and manage AWS infrastructure.

- Operations or infrastructure engineers moving into Site Reliability Engineering (SRE) or cloud automation roles.

AWS Certified Associates (Solutions Architect / SysOps / Developer)

Professionals who have already obtained AWS Associate-level certifications can advance their careers with this program. In fact, this course helps you build on your existing knowledge and achieve a professional-level understanding of DevOps practices. Moreover, it strengthens your expertise in AWS-native DevOps tools while integrating best practices for automation, CI/CD, monitoring, and security.

You will learn to:

- Design and manage end-to-end CI/CD pipelines using CodeCommit, CodeBuild, CodeDeploy, and CodePipeline.

- Automate cloud provisioning with CloudFormation and Terraform.

- Secure and monitor applications using CloudWatch, AWS X-Ray, and AWS Secrets Manager.

Cloud Engineers & Automation Specialists

If you already work with cloud platforms and aim to scale up your automation skills, this course equips you with advanced DevOps expertise for modern delivery models. In addition, you will master containerized deployments, Kubernetes orchestration with EKS, and progressive release strategies such as Blue-Green and Canary deployments.

Ideal participants include:

- Cloud engineers focusing on AWS who want to automate deployments.

- Consultants or freelancers delivering end-to-end automation solutions.

- Solution architects integrating DevOps principles into infrastructure design.

Professionals Targeting Advanced DevOps Roles

This course is especially suitable for individuals aspiring to specialized DevOps roles on AWS. It empowers participants to confidently handle complex automation, enterprise-scale CI/CD pipelines, and cloud-native operations.

- AWS DevOps Engineer – Manages automation, CI/CD pipelines, and deployment orchestration across AWS services.

- Cloud Automation Engineer – Designs and implements infrastructure workflows with CloudFormation, Terraform, and AWS CLI.

- Site Reliability Engineer (SRE) – Ensures availability, performance, and scalability of applications in production.

- Build & Release Engineer – Oversees build systems, release pipelines, version control, and deployment strategies.

Other Suitable Candidates

In addition, the course benefits IT professionals who want to expand into cloud-native DevOps. It is equally useful for those seeking to strengthen AWS expertise while preparing for the DOP-C02 exam with real-world implementation.

- Upskill from traditional IT operations to AWS-based DevOps practices.

- Gain experience with Docker, ECS, Fargate, and Kubernetes (EKS) deployments.

- Advance career prospects in high-demand DevOps roles.

- Prepare simultaneously for AWS DOP-C02 certification and practical project work.

Learning Objectives

Learning Objectives – AWS DevOps Engineer Professional (DOP-C02)

By the end of the AWS Certified DevOps Engineer – Professional (DOP-C02) training, you will be able to design, implement, and manage complete DevOps processes on Amazon Web Services (AWS). The course focuses on building expertise in advanced CI/CD pipelines, infrastructure as code, automated deployments, monitoring, and cloud security.

As a result, you will not only be prepared for the certification exam but also gain the ability to apply cloud-native automation practices to real-world projects.

- Gain in-depth knowledge of AWS DevOps services such as AWS CodeCommit, CodeBuild, CodeDeploy, CodePipeline, CloudFormation, ECS, EKS, CloudWatch, and Secrets Manager, supported with practical scenarios.

- Design and implement end-to-end CI/CD pipelines for automated build, test, and deployment workflows in AWS environments.

- Apply security best practices using IAM roles and policies, Secrets Manager, KMS encryption, and least-privilege access for secure DevOps operations.

- Deploy and manage containerized applications on ECS and EKS, integrating pipelines for seamless container lifecycle management.

- Implement Infrastructure as Code (IaC) using AWS CloudFormation and Terraform for repeatable, version-controlled, and automated cloud resource provisioning.

- Configure robust monitoring, logging, and alerting with CloudWatch, CloudTrail, X-Ray, and SNS for proactive issue detection and resolution.

- Set up Blue-Green and Canary deployment strategies to reduce release risks and ensure high availability, leveraging AWS CodeDeploy and Route 53.

- Manage multi-account and multi-region deployments with cross-account roles, parameter stores, and automated pipelines for enterprise-grade scalability.

- Integrate third-party tools like GitHub, Jenkins, and Jira with AWS pipelines for end-to-end automation.

- Adopt cost-optimized and performance-efficient solutions for automated deployments, resource scaling, and operational excellence.

- Prepare thoroughly for the DOP-C02 certification exam with scenario-based learning, labs, and projects.

- Develop real-world job readiness by working on AWS DevOps projects covering CI/CD, monitoring, container orchestration, and automation workflows.

In conclusion, this training equips participants to become skilled AWS DevOps Engineers. Furthermore, it enables them to deliver secure, reliable, and scalable solutions while bridging development and operations in modern enterprise environments.

Pre-Requisites

Pre-Requisites – AWS DevOps Engineer Professional (DOP-C02)

Before enrolling in the AWS Certified DevOps Engineer – Professional (DOP-C02) course, it is important to meet certain prerequisites. These requirements ensure a smoother learning journey and help you better understand advanced DevOps concepts. In fact, prior exposure to cloud computing and basic DevOps practices creates a strong foundation for success.

- Have a basic understanding of Amazon Web Services (AWS) core services. This will make it easier to navigate and manage cloud resources effectively.

- Gain familiarity with software development, system administration, or IT operations. These skills complement DevOps practices and accelerate your progress.

- Understand CI/CD concepts and DevOps workflows. This knowledge will help you adopt automated pipelines with confidence.

- Work with version control tools such as Git and repository platforms like AWS CodeCommit. For example, practicing commits, merges, and pull requests will strengthen your foundation.

- (Recommended) Complete an AWS foundational or associate-level certification. Options include AWS Certified Solutions Architect – Associate, AWS Certified Developer – Associate, or AWS Certified SysOps Administrator – Associate. These prove your ability to manage and deploy AWS resources effectively.

Ultimately, fulfilling these prerequisites will prepare you to tackle the advanced concepts covered in the AWS Certified DevOps Engineer – Professional (DOP-C02) course. If you need to strengthen your fundamentals first, explore our AWS training programs. They serve as an excellent stepping stone toward professional-level DevOps expertise.

Projects

Project 1: CI/CD Pipeline for Python Application on EC2 using CodePipeline

Scenario: Your team is building a Python-based REST API. The goal is to deploy it automatically across Dev, Test, and Production environments using AWS DevOps services.

What You Will Do:

- Set up source code management with AWS CodeCommit

- Configure builds, linting, and tests using AWS CodeBuild

- Design a multi-stage CodePipeline for CI/CD

- Deploy to Amazon EC2 using AWS CodeDeploy

- Implement environment-wise approvals and rollback strategies

Skills Gained:

- End-to-end CI/CD pipeline design

- Deployment automation with AWS developer tools

- EC2 release management

- Rollback and approval workflows

Project 2: Infrastructure as Code (IaC) with AWS CloudFormation and Terraform

Scenario: A web application needs a scalable architecture. You must automate provisioning using Infrastructure as Code (IaC) to create a Load Balancer, EC2 Auto Scaling, and RDS database.

What You Will Do:

- Write CloudFormation templates to provision:

- VPC, Subnets, and Security Groups

- EC2 Auto Scaling Group with a Load Balancer

- RDS MySQL with backup and failover

- Use Terraform to replicate the same setup

- Automate stack deployment using CodePipeline with CloudFormation

Skills Gained:

- CloudFormation scripting and automation

- Terraform provisioning with AWS provider

- IaC deployment in production pipelines

- Cross-environment automation

Project 3: Secure CI/CD Pipeline with AWS Secrets Manager & IAM

Scenario: In production environments, plain text credentials are not acceptable. You must secure your CI/CD workflows.

What You Will Do:

- Store database credentials and API keys in AWS Secrets Manager

- Inject secrets dynamically into CodeBuild and CodeDeploy pipelines

- Set up IAM roles and policies with least-privilege principles

- Audit pipeline actions with AWS CloudTrail

Skills Gained:

- Secrets management best practices

- IAM policies for DevOps workflows

- Secure CI/CD environment variables

- Compliance-ready pipelines

Project 4: Blue-Green Deployment for Node.js App on AWS Elastic Beanstalk

Scenario: A startup needs zero-downtime updates for its customer-facing Node.js application. You must use Blue-Green deployments.

What You Will Do:

- Deploy the application using AWS Elastic Beanstalk

- Create Blue and Green environments with identical configurations

- Switch traffic between environments using CodePipeline

- Monitor app health and perform rollbacks if needed

Skills Gained:

- Elastic Beanstalk deployment automation

- Blue-Green release strategies

- Validation and rollback techniques

- Integration with CI/CD pipelines

Project 5: Canary Deployment for Container App on ECS + ALB

Scenario: A FinTech company wants to test new features without affecting all users. Canary deployments allow gradual rollout in production.

What You Will Do:

- Build and push Docker images to Amazon ECR

- Deploy containers on Amazon ECS (Fargate) behind an ALB

- Route 10% of traffic to the new version using canary weights

- Monitor metrics with CloudWatch alarms

- Roll back automatically if failures exceed thresholds
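
The threshold-based rollback step above can be sketched as a small decision function. This is an illustration of the logic only, not how CodeDeploy or CloudWatch implement it; in the project this decision would typically be delegated to a CloudWatch alarm. The function name `should_roll_back` and the 5% default threshold are assumptions.

```python
def should_roll_back(
    error_count: int,
    request_count: int,
    max_error_rate: float = 0.05,  # assumed threshold: 5% of canary requests
) -> bool:
    """Decide whether the canary should be rolled back."""
    if request_count == 0:
        # No traffic has reached the canary yet, so there is nothing to judge.
        return False
    return error_count / request_count > max_error_rate


if __name__ == "__main__":
    print(should_roll_back(error_count=10, request_count=100))
    print(should_roll_back(error_count=1, request_count=100))
```

Keeping the rule in one place makes it easy to agree on the threshold with stakeholders before the first canary release.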

Skills Gained:

- Canary deployment on ECS

- ALB traffic shifting

- Progressive microservice rollouts

- Monitoring and rollback automation

Project 6: Centralized Monitoring & Alerts with CloudWatch Dashboards

Scenario: Enterprises need visibility into build and deployment health. You will create dashboards and alerts for stakeholders.

What You Will Do:

- Enable CloudWatch Logs and Metrics for EC2, ECS, and CodeBuild

- Design real-time dashboards for builds, deployments, and error rates

- Set alerts using Amazon SNS

- Trace applications with AWS X-Ray

Skills Gained:

- CloudWatch observability

- Operational dashboards

- Automated alerting with SNS

- Application tracing with X-Ray

Project 7: GitHub Actions to Trigger AWS CI/CD Pipelines

Scenario: Your organization uses GitHub for source control. You must integrate it with AWS pipelines for deployments.

What You Will Do:

- Write GitHub Actions workflows for AWS deployments

- Upload artifacts to S3 and trigger CodePipeline runs

- Automate Docker image builds and push to ECR

- Secure GitHub credentials with OpenID Connect (OIDC) and IAM roles

Skills Gained:

- GitHub to AWS CI/CD integration

- Workflow automation with GitHub Actions

- Cross-platform DevOps orchestration

- GitOps-style delivery

Why These Projects Matter

- Directly aligned with DOP-C02 exam domains

- Reflect real challenges faced by AWS DevOps Engineers

- Prepare you for scenario-based interview questions

- Boost your resume, LinkedIn profile, and GitHub portfolio

Labs

Hands-On Labs – AWS DevOps Engineer Professional (DOP-C02)

These hands-on labs immerse you in AWS DevOps practices. Each lab provides step-by-step instructions for automation, CI/CD pipelines, deployments, monitoring, and security. They reflect real-world scenarios so you gain job-ready expertise while preparing for the AWS DevOps Engineer Professional (DOP-C02) exam.

Lab 1: Setting Up Your AWS DevOps Environment

Objective: Configure your AWS environment securely to start your DevOps journey.

Step-by-Step:

- Create your AWS Free Tier account.

- Set up IAM roles, groups, and policies.

- Launch AWS CloudShell for CLI-based tasks.

- Enable billing alerts and CloudTrail logging.

- Explore AWS Developer Tools in the console.

Lab 2: Git Version Control with CodeCommit

Objective: Manage source code with Git and AWS CodeCommit.

Step-by-Step:

- Initialize a local Git repo and push it to AWS CodeCommit.

- Create feature branches and merge using pull requests.

- Configure IAM Git credentials or SSH keys.

- Enable notifications for commits or pull requests.

- Integrate CodeCommit with AWS CodePipeline.

Lab 3: Building CI Pipelines with CodeBuild

Objective: Automate the build process with AWS CodeBuild.

Step-by-Step:

- Create a CodeBuild project for a sample application.

- Write and configure a buildspec.yml file.

- Add build phases: install, pre_build, build, post_build.

- Run unit tests and generate build artifacts.

- Secure builds using IAM roles and environment variables.
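
As a rough illustration of the four build phases listed above, the following Python sketch renders a small buildspec file as text. The helper `render_buildspec` and the commands in `PHASES` are hypothetical placeholders for a Python project; only `version: 0.2` and the phase names come from the buildspec format itself. The emitter is deliberately simple and assumes commands that need no YAML quoting.

```python
def render_buildspec(phases: dict) -> str:
    """Render a phase -> commands mapping as buildspec-style YAML text."""
    lines = ["version: 0.2", "", "phases:"]
    for phase, commands in phases.items():
        lines.append(f"  {phase}:")
        lines.append("    commands:")
        lines.extend(f"      - {command}" for command in commands)
    return "\n".join(lines) + "\n"


# Hypothetical commands; replace them with your project's own steps.
PHASES = {
    "install": ["pip install -r requirements.txt"],
    "pre_build": ["python -m flake8 ."],
    "build": ["python -m pytest"],
    "post_build": ["echo Build finished"],
}

if __name__ == "__main__":
    print(render_buildspec(PHASES))
```

Saving the output as buildspec.yml in the repository root gives CodeBuild one list of commands per phase, run in the order shown.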

Lab 4: Creating CI/CD Pipeline with CodePipeline

Objective: Build a complete CI/CD workflow with multiple stages.

Step-by-Step:

- Create a CodePipeline with Source, Build, and Deploy stages.

- Add manual approval for the staging stage.

- Monitor pipeline execution and configure failure alerts.

- Trigger the pipeline on every push to the main branch.

Lab 5: Deploying Applications to EC2 with CodeDeploy

Objective: Deploy applications automatically to EC2 instances.

Step-by-Step:

- Install and configure the CodeDeploy agent on EC2.

- Create and upload an AppSpec file.

- Register EC2 instances in deployment groups.

- Deploy via CodePipeline and validate the application status.

- Simulate failures and test rollback functionality.

Lab 6: Blue-Green Deployment with Elastic Beanstalk

Objective: Enable zero-downtime deployments using Blue-Green environments.

Step-by-Step:

- Create Elastic Beanstalk environments (Blue and Green).

- Deploy the app version to the Green environment.

- Test and validate the Green environment.

- Swap environments to route traffic to Green.

- Roll back if necessary using swap or version history.

Lab 7: Canary Deployment Using ECS & ALB

Objective: Roll out new features gradually using containers.

Step-by-Step:

- Deploy two versions of a containerized app in Amazon ECS.

- Register both versions behind an Application Load Balancer.

- Set traffic weighting: 90% to old, 10% to new.

- Monitor logs, latency, and errors with Amazon CloudWatch.

- Gradually increase traffic if performance is stable.
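
The weighting steps above can be sketched in Python as follows. `canary_schedule` computes the sequence of old/new weights, and `forward_action` builds a weighted forward action in the shape that, to the best of my knowledge, the Elastic Load Balancing API accepts for a listener's default actions. The function names, the 30-point step size, and the target group ARNs you would pass in are assumptions for illustration.

```python
def canary_schedule(start_percent: int = 10, step_percent: int = 30) -> list:
    """Return (old, new) weight pairs, ending with all traffic on the new version."""
    if not 0 < start_percent <= 100 or step_percent <= 0:
        raise ValueError("start_percent must be in 1..100 and step_percent > 0")
    steps = []
    new = start_percent
    while new < 100:
        steps.append((100 - new, new))
        new += step_percent
    steps.append((0, 100))  # final step: full cutover
    return steps


def forward_action(old_tg_arn: str, new_tg_arn: str, old_weight: int, new_weight: int) -> dict:
    """Build a weighted forward action for two target groups."""
    return {
        "Type": "forward",
        "ForwardConfig": {
            "TargetGroups": [
                {"TargetGroupArn": old_tg_arn, "Weight": old_weight},
                {"TargetGroupArn": new_tg_arn, "Weight": new_weight},
            ]
        },
    }


if __name__ == "__main__":
    for old, new in canary_schedule():
        print(f"old={old}% new={new}%")
```

With the defaults, the schedule starts at the 90/10 split used in this lab and moves to the next step only after you have confirmed that logs, latency, and error rates are stable.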

Lab 8: Secrets Management with AWS Secrets Manager

Objective: Securely manage secrets in your CI/CD process.

Step-by-Step:

- Create secrets such as database passwords in AWS Secrets Manager.

- Assign fine-grained IAM access for build and deploy services.

- Reference secrets in your buildspec.yml.

- Ensure secrets do not appear in logs.

- Rotate and audit secret access regularly.

Lab 9: Infrastructure Provisioning with CloudFormation

Objective: Use CloudFormation for repeatable infrastructure provisioning.

Step-by-Step:

- Write CloudFormation templates for VPC, Subnets, EC2, S3, and RDS.

- Parameterize stacks for Dev, Test, and Prod.

- Launch, update, and delete stacks.

- Add stack deployment to CodePipeline.

- Monitor deployment with CloudFormation events and rollback options.
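
A minimal sketch of a parameterized template, written as a Python dictionary and serialized to JSON (CloudFormation accepts JSON as well as YAML). It is far smaller than the lab's full VPC/EC2/RDS stack and is meant only to show how one `Environment` parameter can drive Dev, Test, and Prod from a single template. The logical names `ArtifactBucket` and `BucketName` are hypothetical.

```python
import json


def build_template() -> dict:
    """Return a small CloudFormation template with one environment parameter."""
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Description": "Illustrative template with an Environment parameter",
        "Parameters": {
            "Environment": {
                "Type": "String",
                "AllowedValues": ["dev", "test", "prod"],
                "Default": "dev",
            }
        },
        "Resources": {
            "ArtifactBucket": {  # hypothetical logical ID
                "Type": "AWS::S3::Bucket",
                "Properties": {
                    # Tag the bucket with whichever environment was selected.
                    "Tags": [
                        {"Key": "Environment", "Value": {"Ref": "Environment"}}
                    ]
                },
            }
        },
        "Outputs": {
            # Ref on an S3 bucket resolves to the generated bucket name.
            "BucketName": {"Value": {"Ref": "ArtifactBucket"}}
        },
    }


if __name__ == "__main__":
    print(json.dumps(build_template(), indent=2))
```

Launching the same file three times with different `Environment` values yields three independent stacks, which is the pattern the parameterization step in this lab practices.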

Lab 10: Terraform-Based AWS Deployment

Objective: Automate AWS infrastructure using Terraform.

Step-by-Step:

- Install Terraform and configure AWS provider.

- Write reusable modules for EC2, S3, and IAM.

- Run terraform init, plan, and apply.

- Destroy resources using terraform destroy.

- Automate Terraform with AWS CodeBuild.

Lab 11: Monitoring & Dashboards with CloudWatch

Objective: Monitor DevOps health and create custom dashboards.

Step-by-Step:

- Enable and collect logs from CodeBuild, ECS, and EC2.

- Create custom dashboards in Amazon CloudWatch.

- Configure alarms and notifications via SNS.

- Trace request latency with AWS X-Ray.

- Build a monitoring panel for pipeline and app health.
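
To illustrate the alarm-and-notification step, this sketch assembles the settings for an alarm that fires when a CodeBuild project reports a failed build and notifies an SNS topic. It only builds a dictionary and makes no AWS calls; the keys follow the parameters of the CloudWatch `PutMetricAlarm` operation, and the `AWS/CodeBuild` namespace with the `FailedBuilds` metric and `ProjectName` dimension reflects my understanding of the CodeBuild metrics, so verify them against the current documentation. The function name, project name, and topic ARN are hypothetical.

```python
def failed_build_alarm(project_name: str, topic_arn: str) -> dict:
    """Return alarm settings for failed builds of one CodeBuild project."""
    return {
        "AlarmName": f"{project_name}-failed-builds",
        "Namespace": "AWS/CodeBuild",
        "MetricName": "FailedBuilds",
        "Dimensions": [{"Name": "ProjectName", "Value": project_name}],
        "Statistic": "Sum",
        "Period": 300,  # evaluate in 5-minute windows
        "EvaluationPeriods": 1,
        "Threshold": 1,  # a single failed build triggers the alarm
        "ComparisonOperator": "GreaterThanOrEqualToThreshold",
        "TreatMissingData": "notBreaching",  # no builds means no alarm
        "AlarmActions": [topic_arn],  # SNS topic to notify
    }


if __name__ == "__main__":
    # Hypothetical project name and topic ARN.
    settings = failed_build_alarm(
        "demo-api-build",
        "arn:aws:sns:us-east-1:111122223333:pipeline-alerts",
    )
    for key, value in settings.items():
        print(f"{key}: {value}")
```

In a real account, a dictionary like this could be passed as keyword arguments to the SDK's alarm-creation call once you have confirmed the metric names for your project.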

Lab 12: GitHub Actions to AWS CI/CD Integration

Objective: Trigger AWS deployments from GitHub workflows.

Step-by-Step:

- Configure your repo with GitHub Actions.

- Create a .github/workflows/deploy.yml pipeline.

- Authenticate using OIDC and IAM roles.

- Deploy Docker apps to ECS.

- Enable status checks and Slack notifications.

Lab 13: Managing Pipeline Variables and Parameters

Objective: Manage secure and reusable pipeline variables.

Step-by-Step:

- Define environment variables in CodeBuild and CodePipeline.

- Pass parameters between pipeline stages.

- Store configs in Parameter Store.

- Group cross-environment variables using Parameter Store path hierarchies.

- Test variable overrides for Dev, Test, and Prod.
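
One way to reason about per-environment overrides is shown in this minimal sketch: shared defaults are merged with environment-specific values, and the result is mapped to hierarchical Parameter Store names. All variable names, values, and the `demo-app` prefix are hypothetical; the code runs locally and does not call AWS.

```python
# Shared defaults that apply to every environment (hypothetical values).
DEFAULTS = {"LOG_LEVEL": "INFO", "INSTANCE_COUNT": "1"}

# Environment-specific overrides; an empty dict means "use the defaults".
OVERRIDES = {
    "dev": {"LOG_LEVEL": "DEBUG"},
    "test": {},
    "prod": {"INSTANCE_COUNT": "3"},
}


def resolve_variables(environment: str) -> dict:
    """Merge defaults with the overrides for one environment."""
    if environment not in OVERRIDES:
        raise KeyError(f"unknown environment: {environment}")
    return {**DEFAULTS, **OVERRIDES[environment]}


def parameter_names(environment: str, app: str = "demo-app") -> dict:
    """Map resolved variables to hierarchical parameter names."""
    return {
        f"/{app}/{environment}/{key}": value
        for key, value in resolve_variables(environment).items()
    }


if __name__ == "__main__":
    for env in ("dev", "test", "prod"):
        print(env, parameter_names(env))
```

Naming parameters by path, such as `/demo-app/prod/INSTANCE_COUNT`, lets IAM policies and pipeline stages be scoped to a single environment's subtree.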

Lab 14: Advanced CI/CD with Lambda Deployment

Objective: Automate deployments for serverless functions.

Step-by-Step:

- Write and test a Lambda function.

- Package and deploy using CodePipeline.

- Automate versioning and aliasing.

- Include a testing stage before production release.

- Monitor with CloudWatch Lambda metrics.

Lab 15: Automating Feedback Loops & Post-Mortems

Objective: Improve feedback loops and create post-mortem reports.

Step-by-Step:

- Configure pipeline failure alerts via SNS.

- Generate reports with CloudWatch Logs Insights.

- Capture deployment logs and audit trails.

- Share weekly reports via Amazon S3 and CloudFront.

- Conduct retrospectives using data dashboards.

Why These Labs Matter

- Directly aligned with DOP-C02 certification domains.

- Simulate real-world DevOps responsibilities.

- Provide interview-ready experience and GitHub portfolio content.

- Match industry roles like DevOps Engineer, Cloud Automation Engineer, and Site Reliability Engineer.

FAQs

Frequently Asked Questions (FAQs)

Preparing for the AWS Certified DevOps Engineer – Professional (DOP-C02) often raises questions. To make things easier, we’ve compiled the most common queries with clear explanations. As a result, you can approach both your certification exam and career goals with confidence.

1. What is the AWS Certified DevOps Engineer – Professional Certification?

The AWS Certified DevOps Engineer – Professional (DOP-C02) is a globally recognized credential from Amazon Web Services. It validates your ability to design, implement, and manage DevOps pipelines on AWS. Furthermore, the certification covers automation, monitoring, deployment, infrastructure as code (IaC), and security. Therefore, it is ideal for IT professionals already working on AWS who want to move into DevOps roles across industries such as SaaS, finance, e-commerce, and cloud consulting.

2. What job roles can I target after completing DOP-C02?

After earning the DOP-C02 certification, you can apply for several high-demand roles. These include:

- AWS DevOps Engineer

- Cloud Automation Engineer

- Site Reliability Engineer (SRE)

- Build and Release Engineer

- Infrastructure Engineer (IaC / CD focused)

In addition, recruiters often expect knowledge of CI/CD on AWS, CloudFormation, CodePipeline, and container orchestration using ECS or EKS.

3. Do I need any prior AWS certifications before doing DOP-C02?

It is strongly recommended, though not mandatory, to complete one of the Associate-level certifications first. For example:

- AWS Certified Developer – Associate (DVA-C02)

- AWS Certified SysOps Administrator – Associate (SOA-C02)

- AWS Certified Solutions Architect – Associate (SAA-C03)

Completing one of these ensures you understand AWS fundamentals. As a result, you’ll find advanced automation and DevOps practices easier to master.

4. Is DOP-C02 theoretical or hands-on?

The exam questions are scenario-based, so hands-on practice matters, and the training is highly practical. During training you will:

- Build CI/CD pipelines with CodePipeline

- Automate infrastructure with CloudFormation and Terraform

- Secure deployments using IAM and Secrets Manager

- Monitor applications using CloudWatch and X-Ray

- Deploy containers on ECS, EKS, and Fargate

At CloudTech Solutions, the training is project-based. Consequently, you gain confidence with real-world tasks and interviews.

5. How difficult is the DOP-C02 exam?

The DOP-C02 is a professional-level exam and is considered advanced. It tests your ability to automate workflows, apply DevSecOps practices, troubleshoot pipeline issues, and design scalable systems. However, structured training, hands-on labs, and mock exams improve your chances of passing on the first attempt.

6. What types of questions are asked in the DOP-C02 exam?

You can expect multiple-choice and multiple-response questions, most of them built around detailed scenarios. Typically, the exam tests real-world AWS problems such as securing multi-environment deployments, choosing the right service, or rolling back failed releases. In addition, our program includes mock exams that mirror the actual format.

7. How long does the AWS DevOps Professional course take to complete?

The course usually takes 6–8 weeks for working professionals. In fast-track mode, learners with prior AWS experience may finish in about 4 weeks. Furthermore, we recommend completing all labs, projects, and practice tests before scheduling your exam. You will also receive lifetime access to study materials for revision.

8. How will this certification help me in interviews?

Employers often shortlist candidates with AWS Professional certifications. After completing this training, you will be able to discuss CI/CD pipelines and explain DevOps services such as CodePipeline, CloudFormation, and ECS. Moreover, you will gain project-based experience that is highly valued. In addition, CloudTech Solutions provides mock interviews and resume support aligned with industry trends.

9. Is this course suitable for freshers?

The course is best suited for IT professionals with at least one year of AWS experience. Nevertheless, motivated freshers with strong knowledge of AWS basics, Linux, and Git can also attempt it. If you have completed an Associate-level AWS certification, you will find the transition easier. For that reason, beginners may prefer to start with an AWS Associate certification before moving on to DOP-C02.

10. Does the course cover tools beyond AWS?

Yes. In addition to AWS-native services, the course also covers key DevOps tools such as GitHub Actions, Jenkins, Terraform, Slack, Docker, Helm, and kubectl. Consequently, you are better prepared for hybrid and multi-cloud environments.

In conclusion, these FAQs clarify the AWS Certified DevOps Engineer – Professional (DOP-C02) journey. From exam details to career benefits, hands-on labs, and real-world projects, you now have a clear roadmap to succeed with confidence.

Join our live, instructor-led sessions

Technical Requirements

- A stable internet connection to ensure uninterrupted live sessions and lab access.

- A laptop or desktop running Windows, Linux, or macOS for hands-on practice.

- A functional microphone for effective participation in interactive sessions.

General Instructions

- All training materials are proprietary; sharing or distributing any course content is strictly prohibited.

- Live sessions run at fixed times and include interactive Q&A, lab walkthroughs, and real-time demos.

- Practical labs are delivered in a browser-based environment—no local setup is required.

- Professional conduct is expected during live sessions and in community forums.

- If unforeseen circumstances arise, the instructor may reschedule sessions with prior notice.

- Doubt clearing and mentoring support are available via WhatsApp, email, or phone for course-related queries.

- Training is offered in small groups as well as one-on-one; contact us for details.